Control Alone Is Not Strategy

For decades, Intel wasn’t just another semiconductor company. It was the company that made computing happen. Moore’s Law carries the name of Intel co-founder Gordon Moore, and Intel didn’t just follow it. It championed it and kept it alive for the better part of half a century.

Every 18–24 months, the number of transistors on a chip doubled. Sustaining that doubling took relentless engineering precision, and Intel was the undisputed master of it.
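Doubling compounds faster than intuition suggests. The minimal sketch below assumes a perfectly regular doubling period (real product cadences were never that smooth) and computes the growth factor 2^(years / period) for the two cadences mentioned above. The function name is illustrative, not from any library.

```python
def transistor_growth(years: float, doubling_period_years: float) -> float:
    """Growth factor in transistor count after `years`, assuming counts
    double every `doubling_period_years` (an idealized Moore's Law cadence)."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare the 18-month and 24-month cadences over one 20-year span.
    for period in (1.5, 2.0):
        factor = transistor_growth(20, period)
        print(f"doubling every {period} years -> {factor:,.0f}x in 20 years")
```

A two-year cadence alone compounds to 2^10 = 1,024x over 20 years; shortening the period to 18 months pushes the same span past 10,000x.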

While others were designing chips and sending them overseas to be manufactured, Intel did both. It created the instruction set (x86). It designed the architectures (Core, Xeon, Atom). It built the transistors. It led the industry into volume production of strained silicon, high-k metal gates, and FinFETs, and it used self-aligned double patterning at scale, years before most rivals could match any of it. And crucially, it owned the entire stack:

Design + Fabrication = Full-Stack Control.

This vertical integration was Intel’s crown jewel. It enabled tighter feedback loops, faster optimization, and power-efficiency breakthroughs that made Intel chips the standard in every PC, every server, every laptop.

But then, at 10 nanometers, everything broke.

What is a nanometer, anyway?

Today it’s largely a marketing label. Historically, the node name tracked a physical dimension, roughly the transistor’s gate length, but modern node names no longer match any single feature on the chip. The direction still holds: shrinking the node (from 14nm to 10nm to 7nm) lets chipmakers cram more transistors onto a chip, boosting performance, reducing power consumption, and cutting costs per operation.
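Under the idealized, historical assumption that a node name is a true linear dimension that shrinks equally in both directions, transistor density scales with the square of the shrink. The hypothetical helper below illustrates only that arithmetic; it is a back-of-the-envelope sketch, not a model of any real process, since modern node names no longer map to physical features.

```python
def ideal_density_gain(old_node_nm: float, new_node_nm: float) -> float:
    """Idealized transistor-density gain from a node shrink.

    Assumes (hypothetically) that the node name is a true linear feature
    size shrinking equally in x and y, so area per transistor scales with
    the square of the linear ratio. Real modern nodes do not follow this.
    """
    linear_scale = new_node_nm / old_node_nm
    return 1 / linear_scale**2


if __name__ == "__main__":
    for old, new in [(14, 10), (10, 7), (7, 5)]:
        print(f"{old}nm -> {new}nm: ~{ideal_density_gain(old, new):.2f}x density")
```

The classic ~0.7x linear shrink per generation halves the area per transistor (0.7² ≈ 0.49), which is why each full node step roughly doubled density in the era when names still tracked dimensions.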

And for over 30 years, Intel led this race. It didn’t just follow node shrinks — it defined them.

Until it didn’t.

Intel’s roadmap called for its next-generation 10nm process to launch in 2016. It was supposed to be the most advanced node ever: more density, lower leakage, higher performance. But it didn’t arrive on schedule.

Intel’s 10nm hit a wall.

Its overreliance on multi-patterning (complex sequences of exposure and etch steps that print features finer than a single deep-UV exposure can resolve, without EUV lithography) caused delays, manufacturing errors, and abysmal yields. While TSMC reached 7nm on schedule and then brought EUV into volume production at 7nm+ and 5nm, Intel was stuck trying to debug a broken process.

What made it worse was Intel’s own strength: vertical integration. Because design and fab were deeply coupled, a delay in one stalled the other. CPU teams couldn’t ship without a stable process. Fab teams couldn’t simplify without reworking the architecture. The legendary feedback loop had become a bottleneck.

Meanwhile, the world changed.

Apple, frustrated with delays and thermal issues in Intel-powered Macs, made a bold move: it built its own ARM-based SoC, the M1, on TSMC’s 5nm node. Almost overnight, Apple redefined what laptops could be: cool, fast, energy efficient, and, in the MacBook Air, fanless. Intel had nothing comparable.

Nvidia, once a gaming chip company, became the default platform for AI. Its GPUs powered the landmark training runs behind GPT and Stable Diffusion. CUDA, its developer stack, became a walled garden. And unlike Intel, Nvidia never built fabs; it rode TSMC’s node leadership to scale faster.

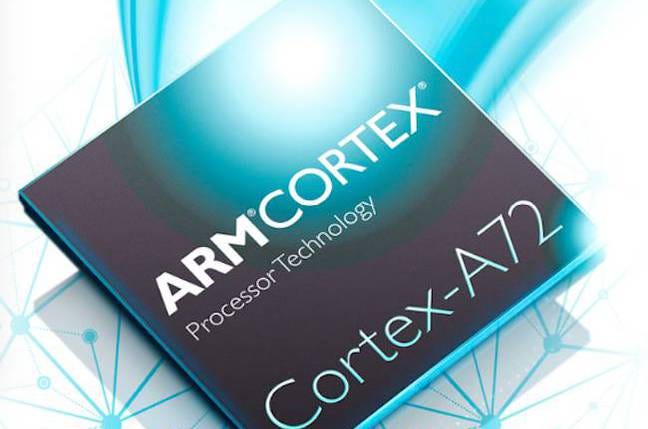

ARM, once laughed off as mobile-only, started winning cloud and data center deals with Amazon’s Graviton, while Apple Silicon and Qualcomm’s Nuvia-derived cores carried it into high-performance laptops.

TSMC, once Intel’s junior in fabs, now runs the world’s most advanced manufacturing line — building chips for Apple, Nvidia, AMD… and now even Intel itself. Yes — the once-great vertically integrated empire now outsources 30% of its CPU production to its former competitor.

So what happened?

Intel didn’t fall because it stopped hiring smart people. It didn’t run out of capital or ideas. It fell because it missed an inflection point.

It over-optimized for a world where general-purpose compute, monolithic chips, and internal fabs defined the winners. But the future was modular, mobile, power-efficient, AI-first. Intel bet on control. The winners bet on adaptability.

In this post, I’ll break down how Intel lost its edge:

Act I: The Empire: How Intel’s vertically integrated model gave it decades of dominance — and how that same model became a bottleneck at 10nm.

Act II: The Uprising: How TSMC, Nvidia, Apple, and ARM capitalized on modularity, fabless scaling, and strategic bets that Intel never saw coming.

Act III: The Missed AI Century: How Intel failed to turn its Habana Gaudi accelerators and OpenVINO software into an AI platform, and why Nvidia became the default for the next decade of compute.

And finally, what every AI founder, CTO, and strategist must learn from this story — because in today’s compute economy, advantage compounds fast. And irrelevance hits even faster.

Act I: Intel’s Masterstroke Becomes Its Achilles’ Heel

By the mid-90s, Intel wasn’t just designing microprocessors; it was architecting the future of compute itself. Nearly every laptop, desktop, and data center rack ran on an Intel chip. It defined the x86 architecture, dictated the cadence of transistor shrinks, and effectively set the pace for the rest of the semiconductor industry. And it did so by building what no one else could: the world’s most tightly integrated compute stack.

Intel didn’t outsource. It didn’t wait. It innovated at every layer:

It pioneered process technologies such as strained silicon, high-k metal gates, and FinFETs, putting them into volume production long before they became industry norms.

It owned the architecture stack — from the Pentium to Xeon, from Core to Atom.

It ran its own fabs, pouring billions into cutting-edge facilities even as more and more competitors went fabless.

And crucially, these weren’t siloed. Design and manufacturing co-evolved, optimizing together. Intel’s architectural teams could iterate hand-in-hand with its fabrication engineers, creating tight feedback loops that unlocked massive gains in power efficiency, thermal performance, and transistor density. This wasn’t just vertical integration — this was strategic compounding.

From 1995 to 2015, Intel’s dominance looked unshakeable. It consistently led in both transistor performance and volume manufacturing, outpacing AMD, IBM, and every would-be rival. Even Apple, Google, and Amazon were once mere customers of Intel’s roadmap.

But it was precisely that roadmap — rigid, linear, insular — that became its downfall.

The 10nm Wall

Intel’s crisis didn’t come from a weak product or a bad quarter. It came from a seemingly small miscalculation: the path to 10nm.

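Instead of waiting for EUV lithography, which simplifies patterning by using much shorter-wavelength extreme ultraviolet light but was not yet production-ready when Intel set its 10nm plan, Intel stuck with multi-patterning on its deep UV tools. That meant splitting each critical layer across multiple masks and exposures to print features finer than a single exposure can resolve, pushing 193nm immersion scanners far past their limits.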

On paper, it was clever. In practice, it was a manufacturing nightmare.

Yields plummeted: Intel couldn’t produce enough working dies to justify scale.

Delays mounted: What was promised for 2016 didn’t arrive in volume until 2019.

Designs stalled: Intel’s CPU teams couldn’t tape out new chips without a reliable process.
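Why do extra patterning steps hurt yield so badly? A common first-order tool is the Poisson yield model, Y = exp(-A · D), where A is die area and D is killer-defect density. The sketch below is a toy illustration under a simplifying assumption: each exposure and etch pass on a critical layer adds its own independent defect density. The function name and all numbers are hypothetical, not Intel data.

```python
import math


def poisson_yield(die_area_cm2: float, defect_densities_per_cm2: list[float]) -> float:
    """Poisson die-yield model, Y = exp(-A * D).

    Each entry in `defect_densities_per_cm2` is the killer-defect density
    contributed by one lithography/etch pass (a hypothetical simplification).
    Independent defect sources add, so their densities are summed.
    """
    total_density = sum(defect_densities_per_cm2)
    return math.exp(-die_area_cm2 * total_density)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    die_area = 1.0      # cm^2
    per_pass_d0 = 0.05  # killer defects per cm^2 added by each pass
    for passes in (1, 2, 4):
        y = poisson_yield(die_area, [per_pass_d0] * passes)
        print(f"{passes} pass(es) per critical layer: yield ~{y:.1%}")
```

Because independent defect sources add in the exponent, quadrupling the passes turns a yield of Y into Y^4: small per-step losses compound into large ones.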

And because Intel owned both design and fab, everything was tightly coupled. The architecture team couldn’t pivot to a different node. The fab team couldn’t move forward without redesigned parts. This was no longer a feedback loop. It was a deadlock.

Meanwhile, the World Moved On

As Intel’s 10nm effort spiraled, the rest of the industry quietly decoupled — and leapfrogged.

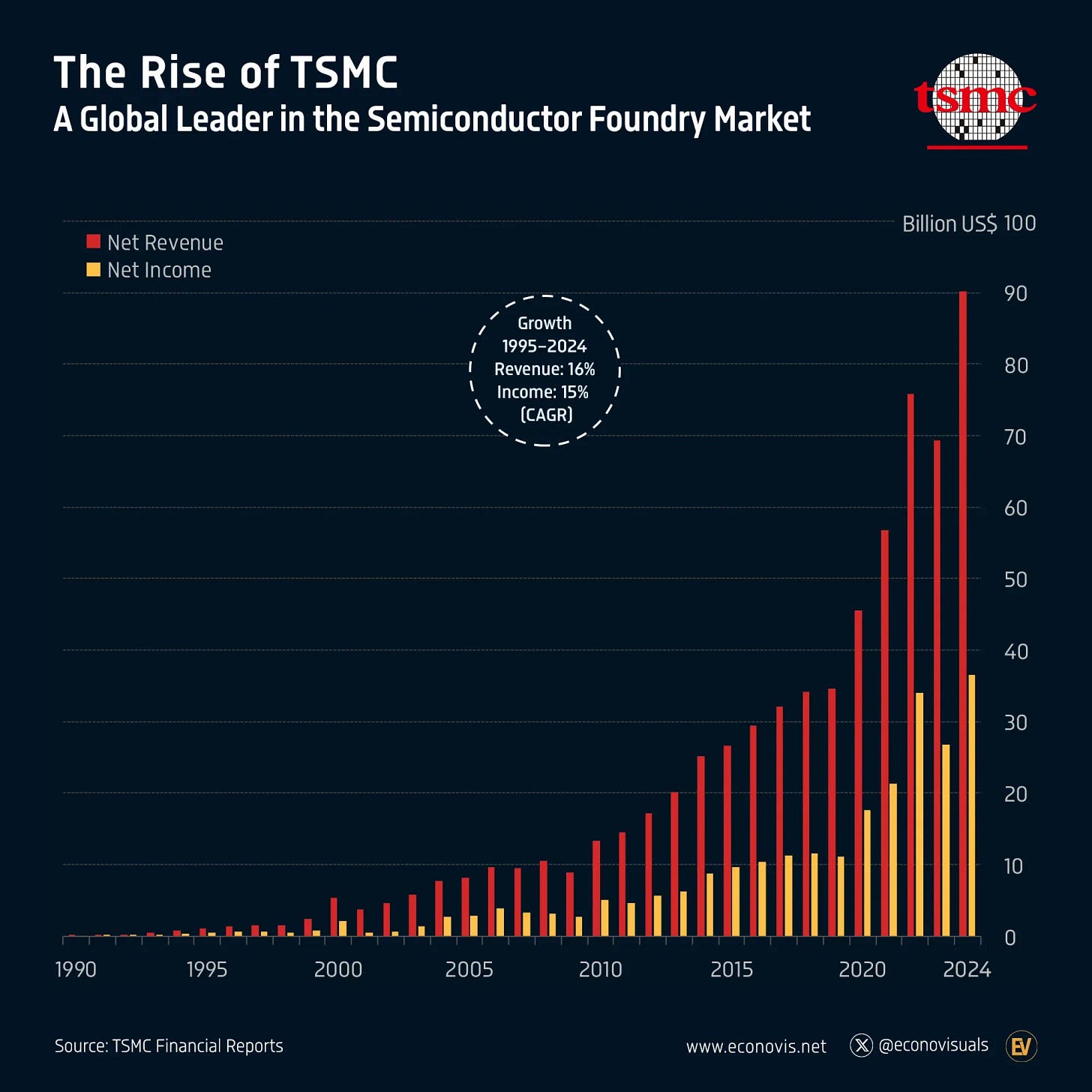

TSMC doubled down on EUV and raced ahead to 7nm and 5nm. It became the default fab partner for Apple, AMD, Nvidia, and Qualcomm.

Apple designed its own chips, first for iPhones and then for Macs, using TSMC’s latest nodes. The result? Macs with M1 and M2 chips that ran cooler, faster, and longer on battery than anything Intel could offer.

Nvidia focused entirely on AI workloads and accelerated compute. By outsourcing to TSMC, it avoided the fab headaches and scaled faster.

ARM’s energy-efficient architectures began to eat into server markets, with Amazon building custom Graviton chips and others following.

Intel, the master of full-stack control, now found itself outmatched at every layer.

The Strategic Error

Intel’s bet wasn’t just technical. It was cultural and strategic. It believed that control over the entire stack (process, architecture, and packaging) would always be an advantage. But in a world shifting toward heterogeneity, modularity, and AI-first workloads, that bet no longer paid off.

Monolithic dies became bottlenecks. AMD went chiplet. Intel couldn’t — not without overhauling its entire architecture.

Power efficiency became the new frontier. Apple and ARM soared. Intel lagged behind.

AI demanded specialized silicon. Nvidia rode the GPU wave. Intel was stuck debating x86 vs. Nervana vs. Habana’s Gaudi.
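The chiplet advantage comes down to yield arithmetic. Under the Poisson yield model, Y = exp(-A · D), a defect anywhere on a large monolithic die scraps the whole die, while splitting the design into smaller, separately tested chiplets scraps only the piece that was hit. The sketch below assumes equal-size chiplets, known-good-die testing, and hypothetical numbers; it ignores packaging yield and die-to-die interconnect overhead, which eat into the gain in practice. Function names are illustrative.

```python
import math


def die_yield(area_cm2: float, defect_density_per_cm2: float) -> float:
    """Poisson yield: probability that a die of the given area has no killer defect."""
    return math.exp(-area_cm2 * defect_density_per_cm2)


def good_silicon_fraction(total_area_cm2: float, d0: float, num_chiplets: int) -> float:
    """Fraction of fabricated logic area that ends up in sellable parts.

    Splits the design into `num_chiplets` equal dies (1 = monolithic).
    Assumes every die is tested before packaging, so a defect scraps only
    the die it lands on. Ignores packaging yield and interconnect overhead.
    """
    chiplet_area = total_area_cm2 / num_chiplets
    return die_yield(chiplet_area, d0)


if __name__ == "__main__":
    # Hypothetical server-class design: 6 cm^2 of logic, D0 = 0.1 defects/cm^2.
    for n in (1, 2, 4, 8):
        frac = good_silicon_fraction(6.0, 0.1, n)
        print(f"{n} die(s): ~{frac:.0%} of silicon usable")
```

For this hypothetical 6 cm² design, one monolithic die yields about e^-0.6 ≈ 55%, while four 1.5 cm² chiplets each yield about e^-0.15 ≈ 86%.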

And all the while, hyperscalers, the most valuable customers in the compute economy, started building their own chips. What made Intel great was now holding it back. Integration had become inertia.

Act II: The Foundry Flips and the Rise of Modular Compute

While Intel was trapped in a 10nm deadlock, the rest of the industry wasn’t just catching up. It was re-architecting the entire compute landscape around different assumptions: modularity, flexibility, and external partnerships instead of internal control.

TSMC: From Vendor to Kingmaker

For decades, TSMC was viewed as a second-tier player. It made chips for others but rarely dictated the roadmap. That changed when it brought extreme ultraviolet (EUV) lithography into high-volume manufacturing ahead of Intel. While Intel was doubling down on complex multi-patterning, TSMC was quietly mastering simpler, scalable manufacturing at 7nm and 5nm.

More importantly, TSMC became the neutral Switzerland of silicon. It didn’t compete with its customers. It empowered them. Apple, Nvidia, AMD, Qualcomm — they all handed over their designs, and TSMC delivered on time, on budget, on the best process nodes available. Fabless players could move faster because they weren’t carrying fab risk. TSMC made that possible.

Apple: The Vertical Challenger

Apple didn’t just ditch Intel chips. It redefined what performance meant. For years, Intel had trained the world to measure CPUs in clock speeds and core counts. Apple flipped the script. The M1 and M2 chips weren’t just fast; they were efficient enough to run a MacBook Air without a fan, and they were tightly integrated with software. By designing its own ARM-based SoCs and outsourcing production to TSMC, Apple gained complete control over user experience without any of the manufacturing baggage. Intel lost its place in MacBooks not because it had bad chips, but because Apple had a better system-level vision.

Nvidia: The Platform Play

Nvidia started out as a gaming chip company. It ended up owning the AI boom. The key wasn’t just its GPU hardware — it was CUDA. By creating a proprietary developer stack that was easy to adopt and hard to leave, Nvidia became more than a chipmaker. It became a platform. As deep learning workloads exploded, every research lab and AI startup defaulted to Nvidia. Even cloud providers built their GPU fleets around it. And crucially, Nvidia didn’t own any fabs. It piggybacked on TSMC’s leading-edge nodes and focused all its energy on architecture and software. The same flexibility Intel gave up, Nvidia weaponized.

ARM and the Cloud Shift

Once seen as mobile-only, ARM became the silent disruptor of cloud computing. Hyperscalers like Amazon, Microsoft, and Google didn’t want to rely on Intel anymore; they wanted customization, efficiency, and better economics. ARM delivered. Amazon’s Graviton chips proved that general-purpose cloud workloads could run faster and cheaper on ARM than on x86. Microsoft rolled out Ampere’s ARM server chips in Azure and then designed its own Cobalt CPU. Google, already running custom TPUs for AI, added its own ARM-based Axion CPU. None of these players needed to wait for Intel’s roadmap anymore. They could build their own.

Intel: A Giant Without Leverage

By the early 2020s, Intel was no longer the axis around which the industry spun. It had lost its manufacturing lead. It had lost the design mindshare. It had lost its biggest customers — not to direct rivals, but to structural shifts it didn’t prepare for. And ironically, the thing that made Intel great — full-stack control — left it unable to adapt. While AMD went chiplet, Intel remained monolithic. While others leaned on TSMC, Intel tried to save its in-house fabs. While Nvidia doubled down on AI software, Intel was still optimizing general-purpose compute.

What once looked like strength had become rigidity. Everyone else moved to a new game. Intel kept trying to win the old one.

Act III: When the World Runs on AI, What Happens to Intel?

By the mid-2020s, a strange shift had taken place. For the first time in decades, the center of gravity in computing wasn’t inside Intel’s roadmap. It was outside it. Nvidia’s GPUs had become the de facto platform for AI. TSMC was setting the pace for manufacturing. And hyperscalers like Amazon, Google, and Microsoft weren’t just buying chips; they were designing their own. Intel, the company that once symbolized the future of computing, was no longer in the driver’s seat.

The Wake-Up Call

The numbers didn’t lie. Intel’s data center market share had been shrinking for years. In AI, it had virtually none. Its Habana Gaudi accelerators were rarely mentioned in the same breath as Nvidia’s H100s or AMD’s MI300s.

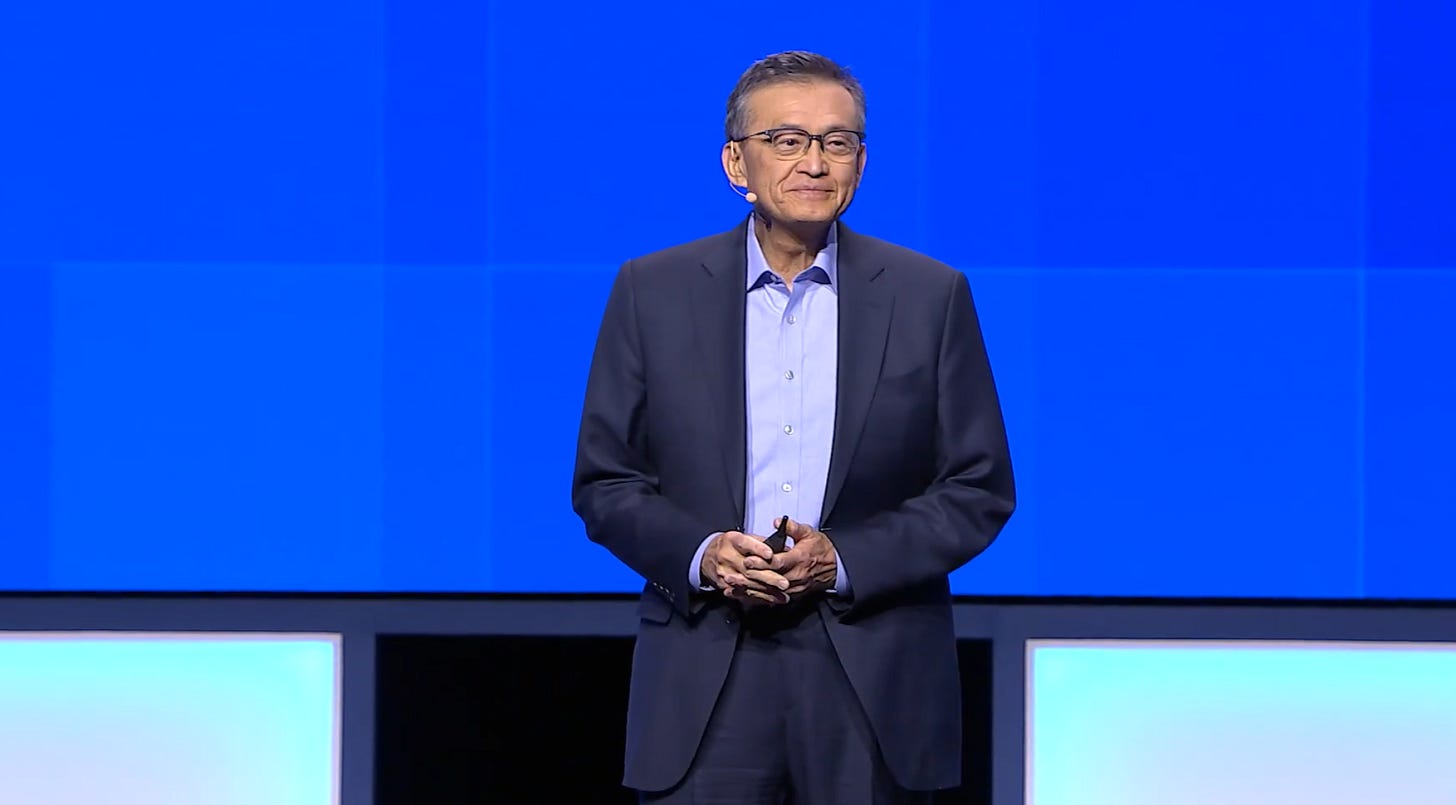

In December 2024, as pressure mounted, Intel made a dramatic move: Pat Gelsinger stepped down as CEO. In March 2025, Lip-Bu Tan, former Cadence CEO and a legendary semiconductor investor with a reputation for bold bets, took the job. Internally, the mood was clear. “This is our last shot,” said one senior Intel engineer.

“Either we rebuild the machine for an AI-first world — or we get left behind permanently.”

Tan didn’t waste time. He flattened bloated org charts, brought in top AI talent, and carved out new product groups focused on modularity and openness. No more monolithic bets. No more delays pretending to be strategy. But while change was happening at the top, the real challenge was deeper — cultural, not technical.

A Culture Trapped in Its Own Greatness

Intel had spent decades dominating through control. It controlled the node, the fabs, the architecture, the packaging, even the compiler stack. That playbook doesn’t work in an AI world.

Modern AI systems are built like living organisms — stitched together from open-source models, accelerators, software stacks, and data pipelines. They are agile, heterogeneous, and evolve in real time. No single vendor owns it all. No single roadmap defines the field. And yet, Intel had spent the better part of a decade trying to make the world adapt to its roadmap, rather than adapting its roadmap to the world.

“Intel didn’t miss AI because it lacked good engineers,” said a former product leader. “It missed it because it kept believing the next great CPU would somehow matter more than the next great platform.”

The truth was, platforms had moved on.

Nvidia wasn’t just selling GPUs. It was selling CUDA — a whole ecosystem of software, libraries, developer tools, and frameworks. Apple wasn’t just selling chips. It was redefining the hardware-software boundary. Amazon wasn’t just designing silicon. It was reshaping the economics of cloud-based inference.

Intel? It was still benchmarking single-thread performance.

2025 and Beyond: Is a Comeback Even Possible?

Even today, Intel is far from broken. It still has unmatched engineering talent, deep IP reserves, and the potential to be the connective tissue of the AI era. The question is whether it can turn loss into leverage.

To do that, Intel must stop trying to own every part of the machine. Instead, it must become the platform upon which others build.

Here’s what that looks like in practice:

Become the most developer-friendly AI provider

Serve as a neutral chiplet marketplace

Open up chip production and IP licensing

Focus on software-first strategies

Fix the culture

And yes, there’s little proof outside Reddit that anyone really dumped a grandmother’s inheritance into Intel, but one post claimed exactly that:

“I put 700 k of grandma’s inheritance into Intel stock at market open… I’m going to hold it for a decade,” wrote one Redditor, earning a spot in WallStreetBets’ lore.

The details may be fuzzy, but the thesis was not: someone with nothing to lose believed in Intel’s turnaround, even when everyone else had given up. It sounds like a meme. But in semiconductor history, comebacks are built by believers daring to see beyond the red ink and the benchmarks. AMD’s turnaround in 2016 looked equally crazy at the time, until it wasn’t.

Intel’s potential resurgence won’t hinge on one perfect chip. It will depend on whether the company stops pretending it can do everything and starts enabling everyone else to do more.

Because in an AI world, you don’t win by owning the stack. You win by helping others build it better. And if you’re looking to go deeper into how compute, strategy, and silicon collide in the age of AI, head over to digdeeptech.com — where I break down the tectonic shifts shaping tomorrow’s tech economy.